


SLAM (Page still under construction)

Simultaneous Localization and Mapping

Overview

In robot navigation, a SLAM algorithm is used to construct a map of the robot's environment while simultaneously locating the robot within that map. There are many different SLAM algorithms; we currently use a vision-based (stereo) system that takes images from the sub's left and right cameras. Using the cameras also allows us to link the system to Object Detection.

The specific system we use is ORB-SLAM2, an open-source, feature-based visual SLAM system, which we modified for the sub.

The algorithm works by detecting features (such as edges and corners) in an image and locating them in space by triangulation, matching each feature between views (such as the left and right images) and against other known map points.
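For a stereo pair, the triangulation step reduces to a simple formula once the images are rectified (so a feature appears on the same row in both images): depth is focal length times baseline divided by disparity. The sketch below shows that relation only; the `StereoCamera` class, the `triangulate` function and all camera values are hypothetical placeholders, not the sub's calibration or the code inside our modified ORB-SLAM2.

```python
from dataclasses import dataclass


@dataclass
class StereoCamera:
    """Pinhole model of a rectified stereo pair (placeholder values, not our calibration)."""
    fx: float        # focal length in pixels (x)
    fy: float        # focal length in pixels (y)
    cx: float        # principal point x, pixels
    cy: float        # principal point y, pixels
    baseline: float  # distance between the left and right camera centres, metres


def triangulate(cam: StereoCamera, u_left: float, v_left: float, u_right: float):
    """Return the 3D point (X, Y, Z) in the left-camera frame, or None.

    Assumes rectified images: a matched feature lies on the same row in both
    images, so only its column differs (the disparity).
    """
    disparity = u_left - u_right
    if disparity <= 0:
        # Zero or negative disparity: point at infinity or a bad match.
        return None
    z = cam.fx * cam.baseline / disparity   # depth from disparity
    x = (u_left - cam.cx) * z / cam.fx      # back-project the pixel column
    y = (v_left - cam.cy) * z / cam.fy      # back-project the pixel row
    return (x, y, z)


if __name__ == "__main__":
    cam = StereoCamera(fx=400.0, fy=400.0, cx=320.0, cy=240.0, baseline=0.1)
    print(triangulate(cam, u_left=360.0, v_left=260.0, u_right=340.0))
```

Small disparities give large, noisy depths, which is why distant features are located less reliably than close ones.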

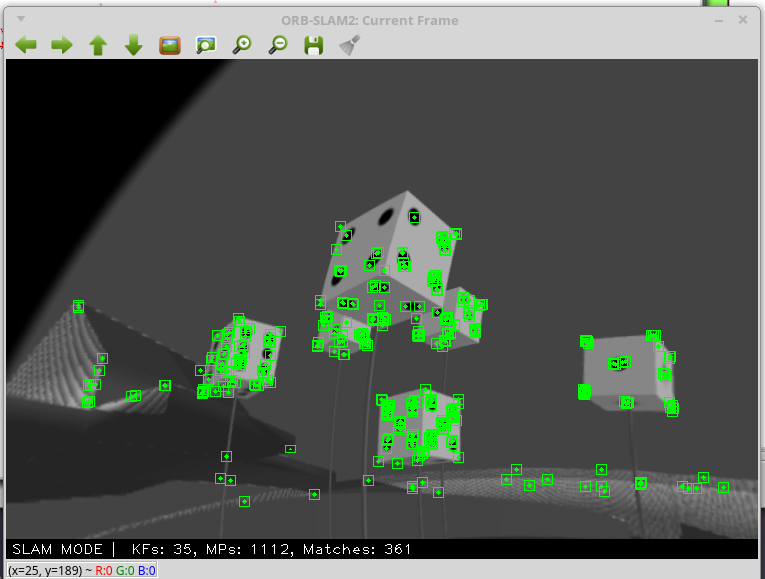

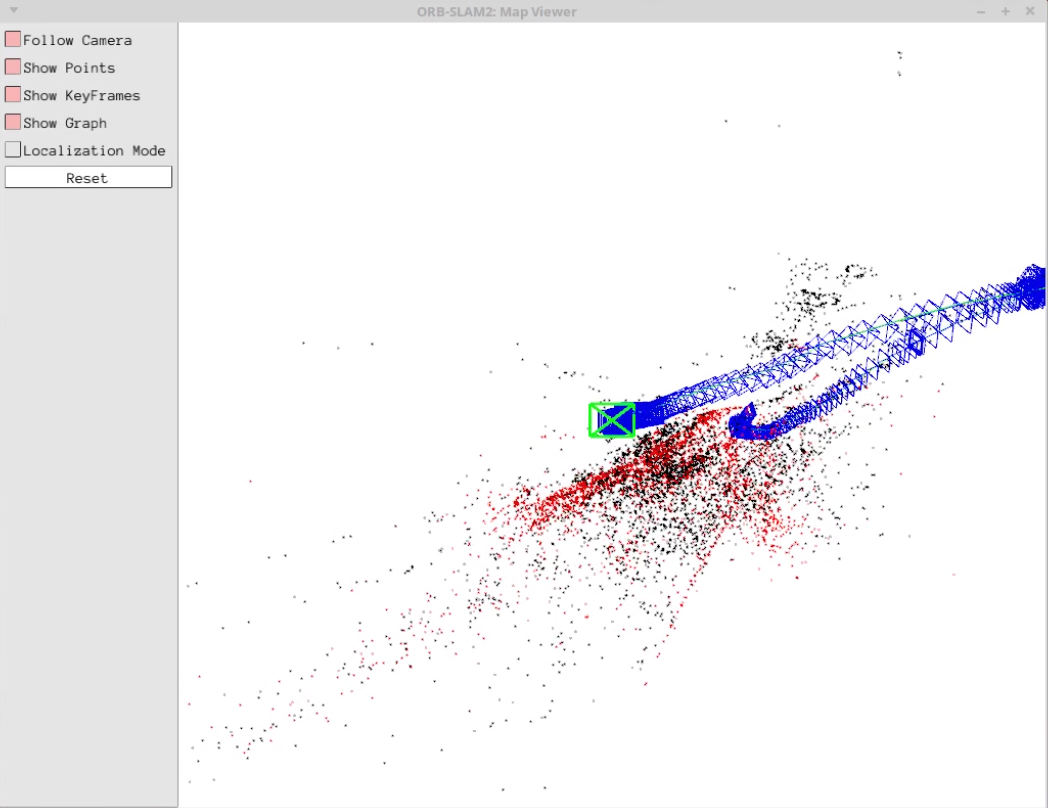

The sub in simulated environment.


The view of a single keyframe with detected map points.

Structure

The SLAM algorithm is complex, but it links to the rest of the sub's system through a single node at ~/ros/src/robosub_orb_slam/src/ros_stereo.cc. The node:

Subscribes to:

- /camera/left/image_raw - image data from the sub's left camera.

- /camera/right/image_raw - image data from the sub's right camera.

Publishes to:

- /SLAMpoints - the 3D location of each map point in space, and the 2D location of each map point on each image frame.
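The 3D and 2D locations are related by the camera model: ideally, a map point's 2D location in a frame is its 3D position transformed into that frame's camera coordinates and projected through a pinhole model. A minimal sketch, assuming a world-to-camera pose given as a rotation matrix `R` and translation `t`; the `project` function and its parameters are hypothetical and are not fields of the actual /SLAMpoints message.

```python
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = Sequence[Sequence[float]]


def project(point_world: Vec3, R: Mat3, t: Vec3,
            fx: float, fy: float, cx: float, cy: float) -> Optional[Tuple[float, float]]:
    """Project a 3D map point into pixel coordinates (u, v).

    R and t map world coordinates into the camera frame:
        p_cam = R * p_world + t
    Returns None if the point lies behind the camera.
    """
    # Rotate and translate the point into the camera frame.
    x, y, z = (
        sum(R[i][j] * point_world[j] for j in range(3)) + t[i]
        for i in range(3)
    )
    if z <= 0:
        return None
    # Pinhole projection onto the image plane.
    return (fx * x / z + cx, fy * y / z + cy)


if __name__ == "__main__":
    identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    print(project((0.2, 0.1, 2.0), identity, (0.0, 0.0, 0.0),
                  fx=400.0, fy=400.0, cx=320.0, cy=240.0))
```

Comparing a projected point with where the feature was actually detected (the reprojection error) is a common way to check how well the map and the estimated pose agree.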

How to Run

$ roslaunch robosub_orb_slam slam.launch use_viewer:=True

How it Works

ORB-SLAM2 is a feature-based algorithm: it extracts keypoints, or features (such as corners), from the incoming camera frames, keeps a subset of frames as keyframes, and uses the features to estimate the location of the sub and map its surroundings.
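ORB features pair a corner detector with a binary descriptor, so two features are compared by counting the bits that differ (the Hamming distance). The sketch below is a brute-force matcher with a nearest/second-nearest ratio test, assuming descriptors stored as Python ints; `match_descriptors`, `max_distance` and `ratio` are hypothetical names with illustrative values, not the thresholds in our modified ORB-SLAM2, which also narrows the search (for example by projecting known map points) instead of comparing every pair.

```python
from typing import List, Optional, Tuple


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two binary descriptors stored as ints."""
    return bin(a ^ b).count("1")


def match_descriptors(query: List[int], train: List[int],
                      max_distance: int = 50,
                      ratio: float = 0.8) -> List[Tuple[int, int, int]]:
    """Brute-force match binary descriptors by Hamming distance.

    For each query descriptor, find its nearest and second-nearest train
    descriptors. Keep the match only if the nearest is within max_distance
    bits and clearly better than the runner-up (ratio test).
    Returns (query_index, train_index, distance) triples.
    """
    matches = []
    for qi, q in enumerate(query):
        best: Optional[Tuple[int, int]] = None  # (distance, train_index)
        second: Optional[int] = None            # second-best distance
        for ti, t in enumerate(train):
            d = hamming(q, t)
            if best is None or d < best[0]:
                second = best[0] if best is not None else None
                best = (d, ti)
            elif second is None or d < second:
                second = d
        if best is None or best[0] > max_distance:
            continue  # no candidate close enough
        if second is not None and best[0] >= ratio * second:
            continue  # ambiguous: runner-up is nearly as good
        matches.append((qi, best[1], best[0]))
    return matches


if __name__ == "__main__":
    left = [0b1011_0000, 0b0000_1111]
    right = [0b0000_1110, 0b1011_0001]
    print(match_descriptors(left, right, max_distance=2))  # [(0, 1, 1), (1, 0, 1)]
```

Real ORB descriptors are 256 bits long; Python ints handle that width without any change to the code.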

It consists of three main modules: